Peruse any news media outlet and you’ll probably find an article touting or warning about the potential impacts of artificial intelligence (AI) on society. With the advent of publicly accessible generative AI models like ChatGPT, the field is having its moment in the sun like never before.

But underlying the chatbots, facial and image recognition technology, predictive text and other technologies is a subdiscipline of AI called machine learning. Computer scientist and machine learning pioneer Arthur Samuel defined this field as the “study that gives computers the ability to learn without explicitly being programmed.”

“Machine learning is a part of AI where you develop mathematical and statistical algorithms to get information out of data to detect patterns in the data,” said Thomas Strohmer, professor in the Department of Mathematics and director of the UC Davis Center for Data Science and Artificial Intelligence Research.

2024 Physics Nobel for Machine Learning

The 2024 Nobel Prize in Physics was awarded to John Hopfield and Geoffrey Hinton “for foundational discoveries and inventions that enable machine learning with artificial neural networks.”

Take image recognition technology, for example. Say you want to create a machine learning algorithm that can tell a cat from a dog. In essence, you’d feed the algorithm a large dataset of cat and dog images (the inputs) along with the correct answer for each image (the outputs). From those pairs, the algorithm learns how to distinguish between the two creatures. In general, the more labeled examples the computer is given, the more accurate its predictions become.
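For readers curious what “learning from inputs and outputs” can look like in code, here is a minimal Python sketch. It assumes each image has already been reduced to two made-up numeric features (a hypothetical “ear pointiness” and “snout length” score). It uses logistic regression, a much simpler technique than the neural networks behind real image recognition. The features, data and function names are invented for illustration only.

```python
import math

# Hypothetical toy data: each "image" is reduced to two invented features,
# (ear_pointiness, snout_length), both scaled 0-1. Real image classifiers
# learn directly from pixels; this is only an illustration.
inputs = [
    (0.90, 0.20), (0.80, 0.30), (0.85, 0.25), (0.95, 0.10),  # cats
    (0.20, 0.90), (0.30, 0.80), (0.25, 0.85), (0.10, 0.70),  # dogs
]
outputs = [1, 1, 1, 1, 0, 0, 0, 0]  # the "answers": 1 = cat, 0 = dog


def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train(xs, ys, lr=0.5, epochs=2000):
    """Fit logistic regression with stochastic gradient descent."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in zip(xs, ys):
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)  # predicted P(cat)
            err = p - y  # gradient of the log-loss with respect to z
            # Nudge the weights to make the correct answer more likely.
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b


def predict(w, b, x):
    """Label a new feature pair as 'cat' or 'dog'."""
    p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
    return "cat" if p >= 0.5 else "dog"


w, b = train(inputs, outputs)
print(predict(w, b, (0.88, 0.15)))  # a new, unseen example -> cat
print(predict(w, b, (0.15, 0.80)))  # -> dog
```

The program never receives a rule such as “pointy ears mean cat.” It only sees examples and answers, and it adjusts its weights until its guesses match those answers.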

Scientists have applied this facet of machine learning to a host of research areas. At the College of Letters and Science at UC Davis, researchers are using the power of machine learning to help protect us from the next pandemic, discover and build new materials, and explore the myriad galaxies in the heavens above.

“There are many grand challenges that our society is facing,” Strohmer said. “There’s a belief that data science and machine learning will play a key role in addressing these problems, not only as a means to solve them but as a key ingredient to get to solutions.”

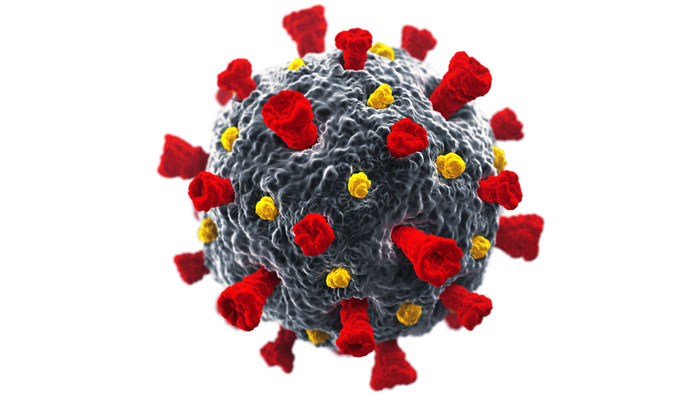

Understanding the coronavirus mutational landscape

In the early days of the COVID-19 pandemic, an interdisciplinary team of UC Davis researchers received a $200,000 RAPID grant from the National Science Foundation to track the evolution of the novel coronavirus.

Contending with a virus and its spread is like an arms race. As viruses jump from host to host, they accumulate mutations, some of which help them spread further. Vaccines provide the strongest protection against the specific strains they’re designed to combat. That’s why coronavirus and flu vaccines are, ideally, updated seasonally.

To get ahead of the next pandemic, UC Davis researchers took a proactive approach by using machine learning to study the coronavirus family. The hope was to identify non-human animal coronaviruses that have the potential to bind to human cells.

Georgina Gonzalez, an assistant specialist in the Arsuaga-Vazquez Lab at UC Davis, was on the frontlines of this research. A statistician by training, Gonzalez had been applying neural networks, a type of machine learning model loosely inspired by the human brain, to a variety of research areas in the lab when the grant funding for the coronavirus project came through.

Gonzalez trained a neural network model on large datasets of coronavirus genetic sequences from various sources, including the National Library of Medicine. Following the training, the neural network was capable of producing a human-binding potential score for non-human animal coronaviruses. Human-binding potential refers to the probability that the virus’ spike protein can bind to human cells. The team then ran molecular dynamics simulations to visualize how such binding could occur.

“We discovered three viruses that were not classified in the past as potentially infectious,” Gonzalez said. “It was super exciting because I’ve been working with these models for a long time, and sometimes you have the data but they don’t work.”

To validate their findings, the team performed a sort of experimental retrospective on SARS-CoV-2, the strain responsible for the pandemic.

“We removed all the information about SARS-CoV-2 from the model and sort of went back in time and used this method,” Gonzalez said. “Using this model, would we be able to predict that SARS-CoV-2 was infectious to humans? And the answer was yes.”

“The resulting tool from the model enables researchers to identify potential new human coronaviruses within seconds before moving on to more costly or time-consuming approaches,” Gonzalez added.

The tool, which the team named h-BiP, can make these predictions based solely on the genomic sequence of the virus’ spike protein. The team published their findings in Scientific Reports.

To see if the computational findings translate to the real world, the Arsuaga-Vazquez Lab has partnered with Assistant Professor Priya Shah, Department of Chemical Engineering and Department of Microbiology and Molecular Genetics, to take their research to the wet lab environment.

“Shah has expressed and purified two of those proteins and we need to do the binding assays to validate our predictions,” said Javier Arsuaga, one of the principal investigators of the Arsuaga-Vazquez Lab and a professor of molecular and cellular biology, and of mathematics. “Biology has definitely become a data science and you can produce so much data in a single experiment. But machine learning can help you cluster data and make sense of it, allowing you to generate hypotheses that can be further tested.”

Simulations for materials science

Biology isn’t the only science bolstered by the power of machine learning. Materials science is also benefiting from the technology, especially when it comes to developing advanced transistors for computing hardware and improving their efficiency.

“Molecules and atoms in a material are not at rest; they are always moving,” said Davide Donadio, a professor in the Department of Chemistry. “The movement of atoms is what dictates several properties of materials, for example their mechanical behavior and the ability of conducting heat.”

Like the research from the Arsuaga-Vazquez lab, Donadio’s research relies on molecular dynamics simulations to visualize this atomistic movement. But at such a fine scale, the atomic motion underlying thermal transport occurs rapidly, on the order of one-billionth of a second, or a nanosecond. Machine learning and neural networks are helping Donadio and his colleagues reveal the chemical and thermal happenings occurring at this timescale in various contexts, from environmental processes to energy and electronic materials and devices.

“If you look at my first papers with neural networks, we were simulating 1,000 atoms, 4,000 atoms,” said Donadio, who started working with neural networks around 2008. “Now I have a project that is funded by the Department of Defense where we are simulating millions of atoms in a full transistor for several nanoseconds. We’re simulating the thermal flow when you switch a transistor on and off, which is essential for the development of new architectures for faster and more efficient computing processors.”

The neural networks developed by Donadio and his colleagues allow them to simulate, with quantum-level accuracy, the dynamics of the atoms and the energy flows that occur when a transistor is switched on or off.

“The development of faster computers or faster computing tools is strategic, and one of the main hindrances to the development of new architectures is how heat is managed and dissipated,” he added. “Using AI in this project, we are developing a multi-scale simulator that can be used without calibrating it on experiments, thus making it a faster and more cost-effective model.”

Currently, the theoretical work has been benchmarked on a transistor built with Intel 16, a commercially available Intel process technology. According to Donadio, the chemical diversity of the system, meaning the various materials used to build it, requires the power of machine learning and neural networks to model how heat propagates within the transistor and to its neighbors.

Since 2021, the Department of Energy has provided continuous support to Donadio and his colleagues for research on AI-driven materials discovery. The team recently received a $1 million award for such a project.

“Machine learning and neural networks are enabling things that wouldn’t be possible in any other way. They’re enabling simulations of complex systems,” Donadio said.

The machine learning eye in the sky

Cast your eyes toward the sky and you’ll find yourself in the company of astronomers using this subdiscipline of AI to explore our cosmos.

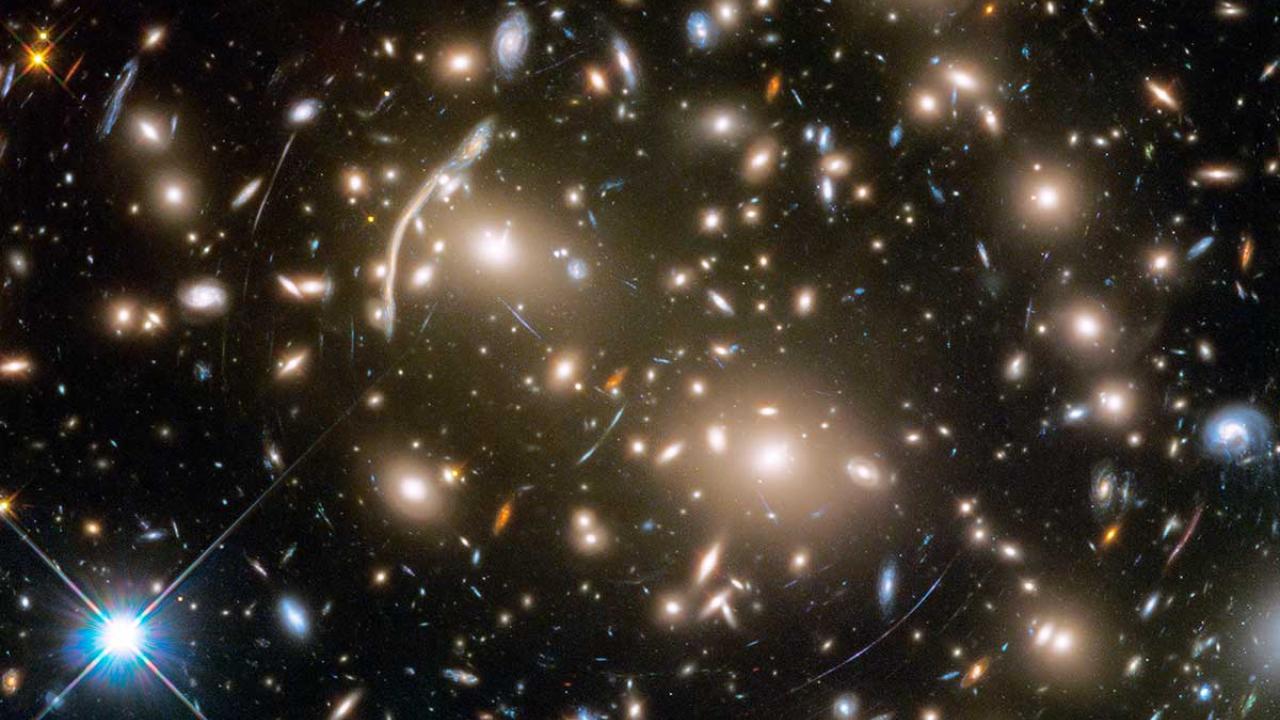

Tucker Jones, a professor of physics and astronomy at UC Davis, is concerned with the deep history of the universe. He studies galaxy formation and evolution. High-powered telescopes are his tools, but Jones also relies on gravitational lenses, massive celestial objects whose gravity naturally magnifies whatever lies behind them, to aid in discovery.

“A gravitational lens can basically magnify the size and brightness of distant objects and even give us multiple images of those objects,” Jones said in a previous interview. “The issue is these lensing configurations are very rare, because what you need is a large concentration of mass — which means a big galaxy or cluster of galaxies — with another galaxy, or object of interest, directly behind it."

Gravitational lenses are so rare that it’s thought that only one in every 10,000 massive galaxies exhibits lensing characteristics, according to the National Science Foundation. Finding such “needles in a haystack,” as Jones refers to them, is a cumbersome task for any human.

With the aid of machine learning, scientists are identifying gravitational lens candidates at an unprecedented rate. Jones and his former graduate student, UC Davis alum Keerthi Vasan, were part of an international team of astronomers that identified nearly 5,000 potential gravitational lenses in recent years. Jones and Vasan initially investigated 77 of those lens candidates, confirming that 68 were strong gravitational lenses. The findings were published in The Astronomical Journal. Jones’ team is currently working on research confirming more of these lenses.

These lens-finding machine learning algorithms are trained on images of real galaxies and known gravitational lenses, but simulated lens images also play a key role in the training data.

“The idea is that the more input you have, the better the results. The number of known lenses is on the order of 1,000 and that’s nowhere near the number you want to train a machine learning algorithm. We wanted to train it on of order 100,000 images, so we had to simulate and make our own,” Jones said.

For Jones and his lab, gravitational lens identification is the first step towards exploring other research questions about galaxy formation and evolution.

“When we look at these lensed galaxies, we’re seeing 10 billion years into the past, when they are forming the majority of their stars,” Jones said.

By harnessing the power of these cosmic tools, Jones and his colleagues can view distant galaxies at a greater spatial resolution, giving them a better view into their internal structure and composition.

“Keerthi was able to study one of these spectacularly lensed galaxies in phenomenal detail and show that the amount of mass being ejected out of it is greater than the amount of mass actually turning into stars,” said Jones, providing a recent example of how gravitational lenses are aiding further research.

“What I’m excited about is the possibility of discovery with these tools,” he said. “What else is out there waiting to be discovered?”

Humans working with machines

While the media attention around AI may sound alarm bells for some, Strohmer is adamant that the technological development is a tool to be harnessed by humans rather than a replacement. Tools like generative AI, according to Strohmer, are useless without the human component.

“There’s something called the infinite monkey theorem, which says that if you sit a monkey at a typewriter and it starts typing randomly for an infinite amount of time, eventually it will recreate the works of Shakespeare randomly, but it will also produce so much garbage, so much nonsense, that it will take us an infinite amount of time to sort through all that garbage,” Strohmer said. “Generative AI can create a lot of things, but a lot of it is useless and sorting through it can be extremely time-consuming.”

For generative AI to be useful for scientific discovery, computer scientists and mathematicians have to design it in close interaction with domain experts like chemists and physicists; otherwise, the results will often be essentially useless, Strohmer said.

“To give an example, if we design generative AI for molecular modeling, we need to ensure that whatever it spits out is meaningful in the context of the problem,” he said.

Such careful design helps researchers refine models so they produce less “garbage” and more scientifically worthwhile results that address their research goals.

When asked about the “intelligence” component of “artificial intelligence,” Strohmer shifted the conversation to poetry.

“These large language models can write poems, but for me, intelligence is more than that,” he said. “It’s not just being able to write a poem but wanting to write the poem. That quality is very different, that’s a quality of creativity that’s human.”

That human quality of creativity extends to scientific discovery as well.


Adapted from an original article, part of a series published by the College of Letters & Science magazine.

Also in this series: AI: A Tectonic Shift in Human Society

Greg Watry is a writer with the College of Letters & Science.